While organizations invest millions in AI transformation, pursuing automation, productivity, and returns, a quieter crisis is unfolding beneath the surface. Employees are drowning in AI anxiety, leading to increased levels of technostress, feelings of overwhelm, and FOBO – the fear of becoming obsolete.

The pressure to constantly adapt, learn, and “keep up” with AI is eroding wellbeing at a scale few leaders fully grasp. Layoff anxiety is only the tip of an iceberg made up of chronic uncertainty, self-doubt, perceived skill gaps, and a sense of never being “up to date” enough to stay relevant.

This growing strain affects organizational performance, weakening productivity, slowing transformation efforts, and ultimately putting revenue and competitiveness at risk.

In this article, we look at how HR can protect employee wellbeing amid the technostress resulting from AI anxiety and the constant drive for employees to adapt.

Contents

The human impact of AI: Understanding AI anxiety and technostress

The role of HR in managing AI anxiety and technostress

Four actions HR can take to reduce technostress and protect wellbeing

The human impact of AI: Understanding AI anxiety and technostress

Digital fatigue and overwhelm threaten employee wellbeing and productivity. Nearly one in three people feels overloaded by digital devices and subscriptions. Meanwhile, 60% of people with high screen time worry about the emotional and physical toll of the digital world.

The rapid rise of AI has worsened these concerns. Among U.S. workers familiar with AI, 71% express anxiety about its effects. A study of 1,606 employees found that concerns about AI, such as job loss and career insecurity, are strongly linked to lower performance and wellbeing. These anxieties reflect deeper worries about displacement, loss of autonomy, and keeping up with evolving job responsibilities.

When anxiety rises, organizations feel the impact through reduced focus, slower adoption of new tools, and overall declines in productivity and work quality.

Instead of thinking about AI as “support in your pocket,” we should ask what it can actually do for people. At an event I went to, nearly every stand claimed to be AI-enabled, but I kept wondering: what problem are we really trying to solve? If your product uses AI, will it actually address the fact that employees working on a laptop are distracted every two minutes, according to Microsoft’s latest Future of Work report? It takes about 25 minutes to reach a state of deep focus, and we’re distracted every two minutes…

It’s not an organization’s responsibility to improve happiness, and that’s where a lot of apps and quick fixes miss the mark. But it is their responsibility not to make things worse. And when we flip that switch, we stop looking at the individual and giving them shiny tools to deal with and actually look at the workplace itself. Instead, we ask, how can we let the smart humans do the job that they were brought in to do with the minimal amount of distractions?

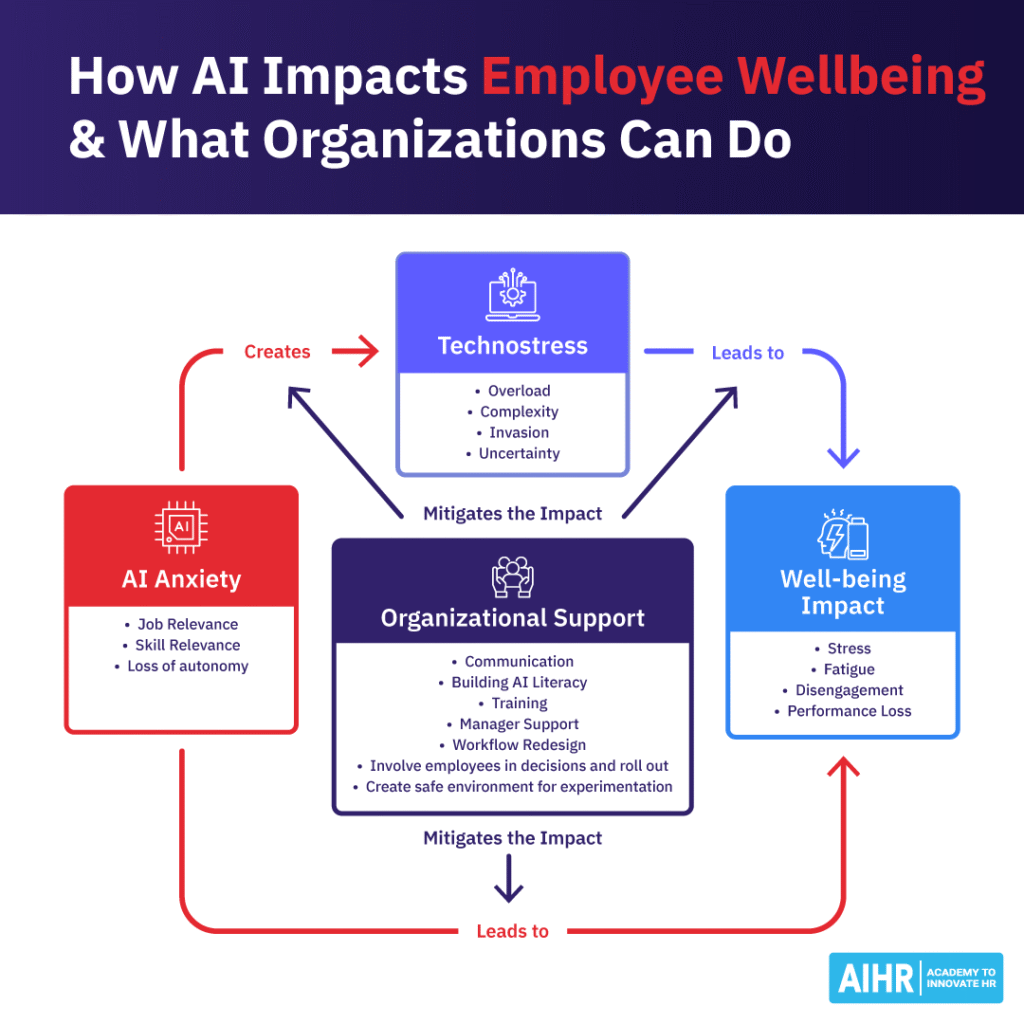

AI anxiety is emerging as a central driver of technostress. Employees encounter numerous AI tools but often lack the time to learn how to work with them effectively. The result is an “always-on” culture that blurs work-life boundaries, along with added complexity as the pace of change outstrips employees’ readiness to learn.

Concerns about job security, skill relevance, loss of autonomy, or the pace of change serve as the psychological spark that activates the four classic technostress creators: overload, complexity, invasion, and uncertainty.

When employees feel threatened or underprepared, these technostress creators produce cognitive strain, emotional fatigue, and ultimately, declines in wellbeing and performance.

Importantly, evidence shows that organizational support can disrupt this cycle. Transparent communication, accessible skill-building opportunities, and attentive leadership significantly weaken the link between AI anxiety and technostress.

These forms of support also buffer the downstream effects of technostress on wellbeing, shifting employee responses from fear and resistance toward confidence, capability, and engagement.

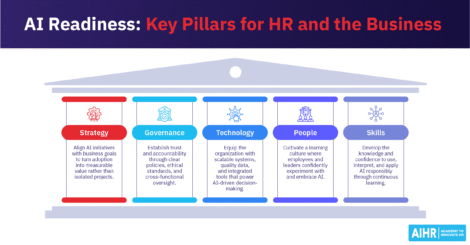

Organizations should treat AI anxiety and technostress as strategic risks to successful transformation. Left unaddressed, they slow implementation, erode trust, and undermine productivity.

The role of HR in managing AI anxiety and technostress

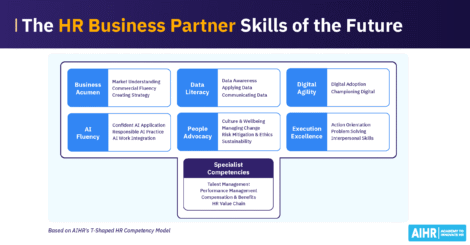

HR plays a critical role in enabling leaders to make responsible, people-centered decisions about AI adoption. Many leaders focus on efficiency and performance gains without fully understanding the workforce implications of how AI reshapes workloads, alters autonomy, or introduces new sources of stress.

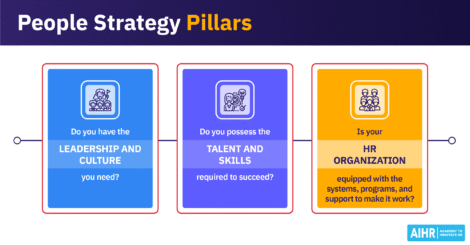

Here’s where HR’s impact lies:

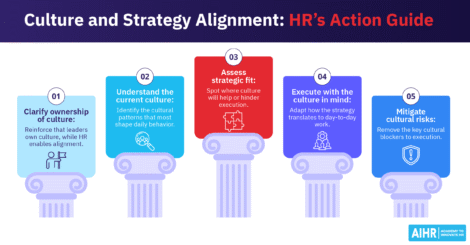

- Strategic guidance for leaders: HR must help leaders look beyond efficiency gains and understand how AI reshapes workloads, autonomy, and stress, guiding them toward decisions that balance innovation with employee wellbeing.

- Clear frameworks for responsible AI use: HR should equip leaders with structured ways to evaluate AI use cases, identify where human judgment remains essential, and anticipate how roles and capabilities will need to evolve.

- Psychological safety for experimentation: HR needs to create an environment where employees feel safe to explore AI, ask questions, and make mistakes. Learning spaces, such as AI labs or low-stakes testing sessions, help build confidence rather than fear. When employees are encouraged to test, challenge, and provide feedback on AI tools, adoption becomes a shared journey instead of a top-down mandate.

- Communication and support structures: HR must embed transparent communication, prepare managers to spot early signs of digital fatigue, explain the purpose and limitations of AI tools, and maintain open feedback channels for continuous improvement and real-time adjustments.

To summarize, HR can offer strategic guidance to leaders, promote psychological safety for employees, and facilitate communication that fosters trust. This ultimately creates an environment where AI adoption and employee wellbeing can coexist.

Four actions HR can take to reduce technostress and protect wellbeing

From a practical perspective, HR should prioritize four key actions as a starting point in creating a safe and trusted environment for AI adoption.

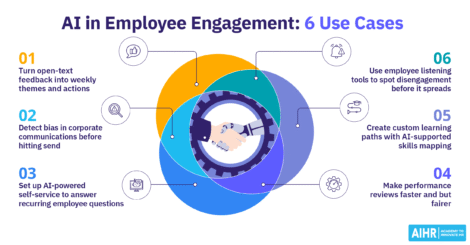

1. Build AI literacy and confidence

As we’ve already mentioned, employees are more likely to adopt AI when they can experiment without fear of making mistakes. That involves building AI literacy, which is about helping people understand what AI is, how it works, and where it can be applied responsibly and effectively in everyday work.

HR can create this environment, for example, by establishing AI learning labs where employees can explore tools in short, guided sessions.

The focus should be on confidence, not on compliance. Instead of mandating adoption targets, organizations can encourage employees to share what they discovered, what didn’t work, and how tools could be applied to their roles. This reduces anxiety, normalizes learning, and builds capability from the ground up.

2. Redesign work with wellbeing in mind

AI reshapes workloads, not just workflows, and HR must assess both. For instance, automating report generation may save time, but if leadership interprets this as “capacity gained” and increases output expectations, burnout will rise rather than fall.

Practical steps include:

- Conducting workload impact assessments before rollout

- Observing whether AI reduces or redistributes effort in teams

- Reallocating tasks so employees spend freed-up time on strategic or meaningful work.
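One way to make the second step concrete is to compare the hours a team spends per task before and after an AI rollout. The sketch below is illustrative only: the task names, the hour figures, and the `classify_effort_shift` helper are hypothetical, and the 10% tolerance is an assumed cutoff rather than an established benchmark.

```python
def classify_effort_shift(before, after, tolerance=0.10):
    """Label a rollout as 'reduced', 'increased', 'redistributed', or 'unchanged'.

    before/after map task names to a team's weekly hours.
    tolerance is an assumed cutoff, expressed as a share of the original total.
    """
    total_before = sum(before.values())
    total_after = sum(after.values())
    change = (total_after - total_before) / total_before

    if change <= -tolerance:
        return "reduced"
    if change >= tolerance:
        return "increased"

    # Total effort is roughly the same: check whether hours moved between tasks.
    tasks = set(before) | set(after)
    shifted = any(before.get(t, 0) != after.get(t, 0) for t in tasks)
    return "redistributed" if shifted else "unchanged"


# Hypothetical weekly hours for one team
before = {"report generation": 10, "data checks": 4, "client calls": 6}
after = {
    "report generation": 3,
    "reviewing AI output": 6,
    "data checks": 4,
    "client calls": 6,
}
print(classify_effort_shift(before, after))
```

In this made-up case, the hours saved on report generation largely reappear as time spent reviewing AI output, which is the kind of shift a workload impact assessment is meant to surface.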

We talked about AI and employee wellbeing with strategic wellbeing leader and author Ryan Hopkins.

3. Own the narrative and make it human-centered

Employees need clarity, not hype. HR should proactively communicate the reasons behind introducing AI, the problems it addresses, and how roles will evolve. This narrative-building is essential for maintaining trust.

Leaders also need coaching to recognize the signs of digital fatigue, such as reduced responsiveness, irritability, declining quality, or repeated mistakes. A manager who notices these early can adjust workloads, review tooling complexity, or offer support, preventing deeper burnout.

4. Embed wellbeing metrics into AI rollouts

To ensure AI enhances rather than erodes the employee experience, wellbeing must become part of the measurement architecture. HR can:

- Add stress levels, autonomy, clarity, and engagement to KPIs for all AI initiatives

- Use short pulse surveys during key rollout stages to capture real-time sentiment

- Monitor whether AI is reducing administrative burden or inadvertently increasing pace and volume.
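As a rough illustration of how pulse survey results could feed into rollout KPIs, the sketch below compares average scores between a baseline survey and a mid-rollout survey and flags dimensions that have dropped. Everything here is an assumption made for the example: the `flag_declines` helper, the 1-5 response scale, the 0.5-point threshold, and the sample data. Stress is phrased as "manageable stress" so that a higher score is better on every dimension.

```python
from statistics import mean


def flag_declines(baseline, current, min_drop=0.5):
    """Return dimensions whose average score fell by at least min_drop.

    baseline/current map a dimension name to a list of 1-5 survey responses,
    all scored so that higher is better. min_drop is an assumed threshold.
    """
    declines = {}
    for dimension, scores in baseline.items():
        later = current.get(dimension)
        if not scores or not later:
            continue  # skip dimensions without responses in both surveys
        drop = mean(scores) - mean(later)
        if drop >= min_drop:
            declines[dimension] = round(drop, 2)
    return declines


# Hypothetical responses from a small team
baseline = {
    "autonomy": [4, 4, 5, 3],
    "clarity": [4, 3, 4, 4],
    "manageable stress": [4, 4, 3, 4],
}
mid_rollout = {
    "autonomy": [4, 4, 4, 3],
    "clarity": [3, 3, 2, 3],
    "manageable stress": [3, 3, 3, 2],
}
print(flag_declines(baseline, mid_rollout))
```

A flagged dimension is a prompt for a conversation with the team, not a verdict. With small groups, a single response can move the average noticeably.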

Final words

The rise of AI presents a critical moment for organizations. While the promise of efficiency is compelling, the human cost, manifested as AI anxiety and technostress, is a strategic risk that HR can no longer afford to ignore.